1. Question start:

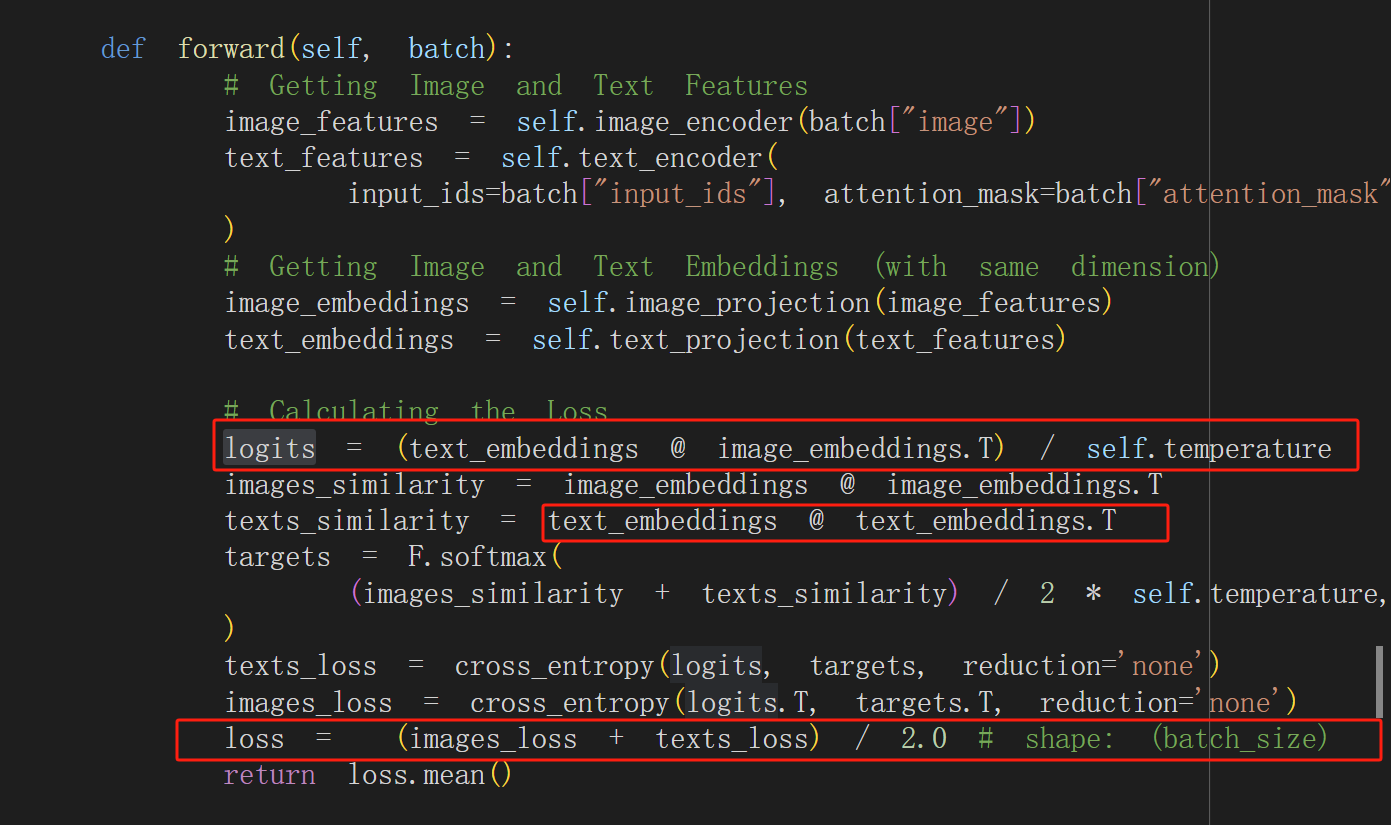

When doing contrastive learning between images and text, one has to project the image and text embeddings into a shared space of the same dimension and compute their dot products, and in practice one of the two matrices is transposed before the multiplication. For example, the following is the contrastive loss calculation from a CLIP training script. Two things are confusing here: the similarity is computed between a matrix and its own transpose (`itself @ itself.T`), and the total loss is simply the average of the two losses.

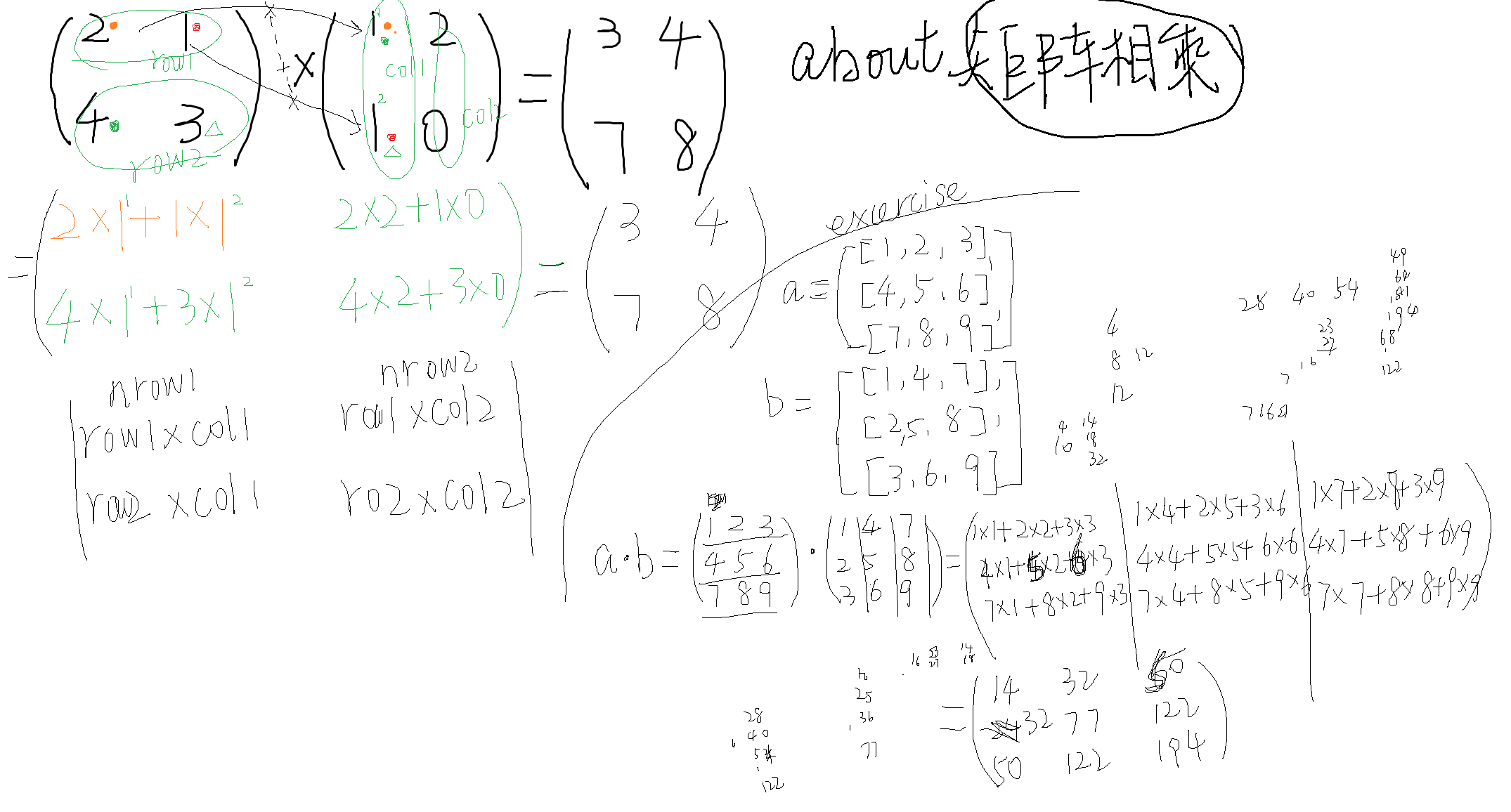
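Since the screenshot cannot be copied and run, here is a minimal NumPy sketch of one common way such a loss is written. It is an assumption about the general pattern, not the script's actual code. All names (`contrastive_loss`, `image_emb`, `text_emb`, `temperature`) are hypothetical. It shows where the transposes come from: multiplying a `(batch, dim)` matrix by another's transpose gives a `(batch, batch)` table of pairwise dot products.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def log_softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def soft_cross_entropy(logits, targets):
    # Per-row cross-entropy against soft (non one-hot) targets.
    return -(targets * log_softmax(logits, axis=-1)).sum(axis=-1)

def contrastive_loss(image_emb, text_emb, temperature=1.0):
    # image_emb, text_emb: (batch, dim); row i of each is a matching pair.
    # Cross-modal similarity: entry [i, j] is text i . image j.
    logits = (text_emb @ image_emb.T) / temperature        # (batch, batch)
    # Within-modality similarity: a matrix times its own transpose.
    images_similarity = image_emb @ image_emb.T            # (batch, batch)
    texts_similarity = text_emb @ text_emb.T               # (batch, batch)
    # Soft targets built from how similar the items already are to each other.
    targets = softmax((images_similarity + texts_similarity) / 2 * temperature)
    texts_loss = soft_cross_entropy(logits, targets)       # text -> image
    images_loss = soft_cross_entropy(logits.T, targets.T)  # image -> text
    # Average the two directions so neither modality dominates.
    return ((images_loss + texts_loss) / 2.0).mean()

# Hypothetical toy batch: 4 pairs, 8-dimensional shared space.
rng = np.random.default_rng(0)
image_emb = rng.normal(size=(4, 8))
text_emb = rng.normal(size=(4, 8))
image_emb /= np.linalg.norm(image_emb, axis=1, keepdims=True)
text_emb /= np.linalg.norm(text_emb, axis=1, keepdims=True)

loss = contrastive_loss(image_emb, text_emb)
print(loss)
```

In this sketch the self-similarity matrices are used only to build the targets, while the averaged loss covers both retrieval directions (text to image and image to text). A variant with hard targets would instead use the identity matrix as `targets`.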

2. What @ means in Python:
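
`@` is Python's matrix multiplication operator (added in Python 3.5 by PEP 465). Writing `a @ b` calls `a.__matmul__(b)`, and libraries such as NumPy and PyTorch implement that method for their arrays and tensors. As a rough illustration, the toy `Matrix` class below (hypothetical, written only for this example) implements `@` in plain Python and multiplies a matrix by its own transpose.

```python
class Matrix:
    """Toy matrix type, only to show how `@` is dispatched."""

    def __init__(self, rows):
        self.rows = [list(r) for r in rows]

    def transpose(self):
        # Swap rows and columns.
        return Matrix(zip(*self.rows))

    def __matmul__(self, other):
        # Called for `self @ other`: each entry is a row-by-column dot product.
        cols = list(zip(*other.rows))
        return Matrix(
            [[sum(a * b for a, b in zip(row, col)) for col in cols]
             for row in self.rows]
        )

a = Matrix([[1, 2], [3, 4], [5, 6]])   # 3 rows, 2 columns
gram = a @ a.transpose()               # 3 x 3: every row dotted with every row
print(gram.rows)                       # [[5, 11, 17], [11, 25, 39], [17, 39, 61]]
```

Entry `[i][j]` of `a @ a.transpose()` is the dot product of row `i` with row `j`, which is why this pattern shows up whenever pairwise similarities within one batch are needed.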
